Machine Learning Notes 03

Multivariate Linear Regression

- Hypothesis: \( h(\theta) = \sum_{i=0}^{n}\theta_{i}X_{i} \)

\( X=\begin{bmatrix}x_{0}\ x_{1}\ .\ .\ .\ x_{n}\end{bmatrix}\in \mathbb{R}^{n+1} ;, \theta=\begin{bmatrix}\theta_{0}\ \theta_{1}\ .\ .\ .\ \theta_{n}\end{bmatrix}\in \mathbb{R}^{n+1}\)

Also, \(h(\theta) = \theta ^{ T} X\)

Gradient descent

- Algorithm:

Repeat{

\(\theta_{j}:=\theta_{j}-\alpha\frac{1}{m}\sum_{i=1}^{n}(h_{\theta}(x^{(i)})-y^{(i)})x^{(i)}\)

}

Feature Scaling

- Idea: Make sure fretures are on a similar scale.

- Mean normalization

Replace \(x_{i}\) with \(x_{i}-\mu_{i}\) to make features have approximately zero mean(Do not apply to \(x_{0}\), Which we suppose equals 1)

\(x_{i}:=\frac{x_{i}-\mu_{i}}{s_{i}}(i\neq0)\);

(\(\mu_{i}\) :Average value of \(x_{i}\) in training set, \(s_{i}\) :range(=max-min)(or standard deviation))

Learning Rate

- If \(\alpha\) is too small: slow convergence;

- If \(\alpha\) is too large: \(J(\theta)\) may not decrese on every iteration, may not converge(slow converge also possible);

Normal Equation —Solve for \(\theta\) analytically

- For the input X, add a column on the left in X filled with 1, make up a new X;

- then, \(\theta = (X^{ T}X)^{-1}X^{ T}y\)

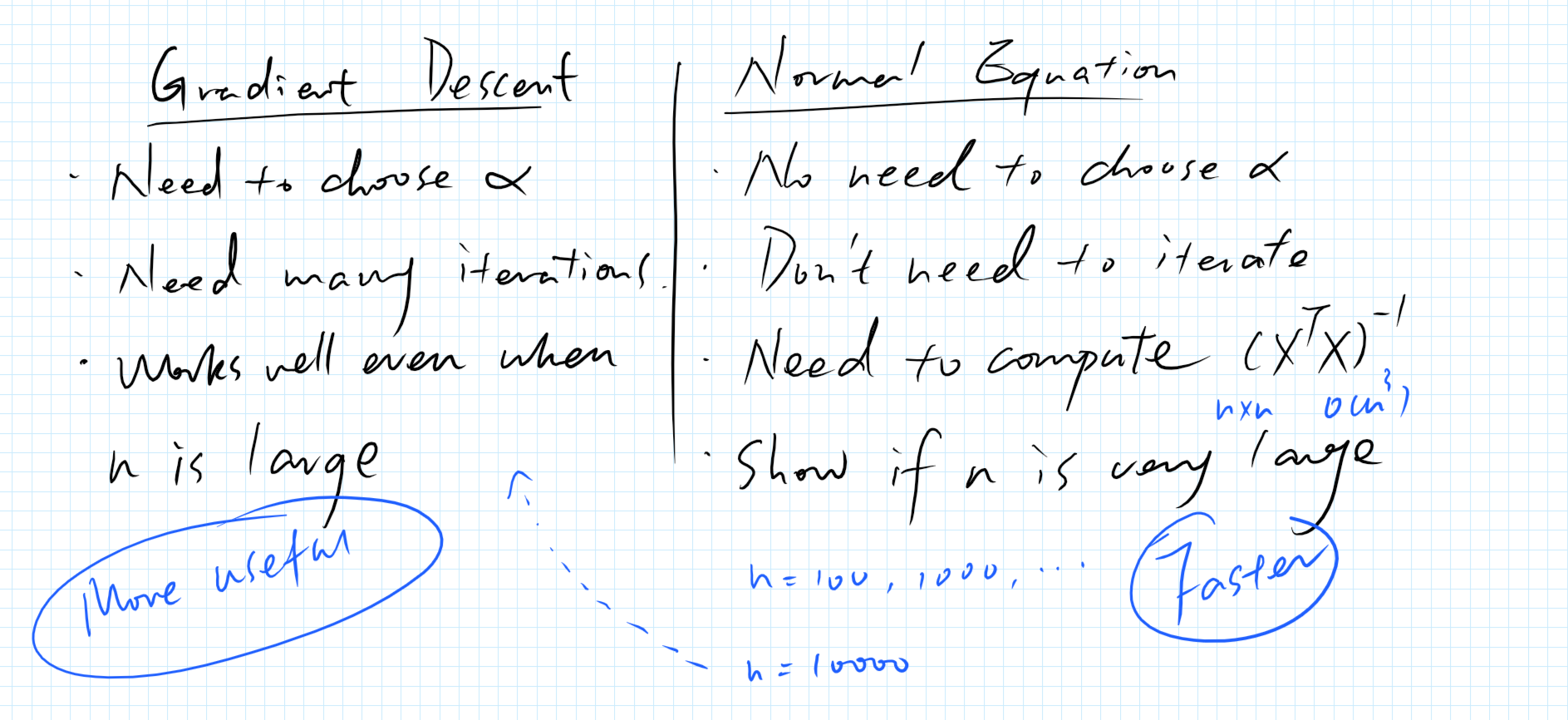

The difference between Gradient Descent and Normal Equation: